Is AI's Proliferation Moving Us into a WALL-E Dystopia?

One educator's attempt to carve a path between the AI evangelists and pessimists.

One of the joys of having a four-year-old is the opportunity to revisit classic kids’ films. On a recent Friday night, our family chose Pixar’s WALL-E, a movie that’s somehow nearly 17 years old.

The film is set in a distant future in which Earth has been abandoned under the weight of its own waste. WALL-E, the film’s rusted, ever-curious robot protagonist, roams the barren landscape, methodically collecting garbage to curate a museum of past human life on that once-vibrant planet.

After 37 beautifully dialogue-free minutes (something I suspect I enjoyed more than Fitz), WALL-E is suddenly catapulted into space and lands aboard Axiom, the spaceship where humans have escaped their ruined world. Back on Earth, WALL-E had long watched Axiom’s now-dated advertisements play on repeat: glossy visions of a utopia where technology delivers everything people want, exactly when they want it, in a perfectly customized environment.

What he finds, however, is something radically different: sloth, unchecked consumption, and a society of humans physically and mentally atrophied by their own comfort. If Earth’s wasteland was bleak, this version of the future isn’t any better.

Released nearly 15 years before ChatGPT landed on our desktops, WALL-E feels like a modern Rorschach test for how we perceive technological progress. Is an AI-driven future closer to the hopeful ads WALL-E saw back on Earth, or is it more like the world he finds once he arrives?

On any given day, I find myself wavering between these two visions of what’s to come.

The Growing Role of AI in Higher Education

I’m sitting at the Amsterdam airport on my way home after spending a week in India. I had been in Mumbai, teaching and learning alongside 40 Executive MBAs as part of a leadership course in partnership with IIT-Bombay.

A week before my trip, a student emailed me with a question: Would I address the role of generative AI in leadership? Scanning my assigned readings, I quickly realized that none directly tackled the topic. Reviewing the previous year’s slides, I found the same gap.

In some ways, this oversight makes sense. Two years ago, I knew astonishingly little about ChatGPT. It had just launched the previous fall, and I hadn’t integrated AI into my work in any meaningful way. Today, though, it’s hard to imagine standing in front of a room full of executives without addressing it. The world has changed—and is changing—fast.

So, how does AI shift the context of leadership? And, more relevant to this blog, how might we consider it in the context of a rich life?

From my perspective as an educator, a few things stand out. Over the past 24 months, I’ve noticed that the overall quality of research-informed writing in my classes is both improving and homogenizing. The connecting “and” is the crucial word.

On one hand, AI-generated responses often lead to more informed writing and sharper analysis. A well-crafted prompt—like the one below on leadership—can yield thoughtful, intelligent takes on a topic. In many cases, it serves as a helpful corrective to the muddled thinking and sloppy writing that sometimes emerge in undergraduate and graduate submissions.

AND... at their worst, these tools have a homogenizing effect on the output. On the final leg of my flight back, I finished grading 30 case submissions for another class I had taught this spring. It’s hard to overstate just how similar they are all starting to sound. We bold the same words, italicize the same concepts, and cite an increasingly similar set of references. Most concerning of all, our perspectives are becoming more aligned, sometimes to the point of blandness.

At their best, these tools produce writing that is more informed and well-researched. At their worst, the submissions lack soul, voice, and personality.

Point and Counterpoint on Individual Consequences

Let me share two recent studies on AI that get to the heart of the issue.

The first study, conducted by a group of business school academics in partnership with the Boston Consulting Group, examined the impact of AI on consulting work. In this project, the researchers provided a group of consultants with access to ChatGPT and comprehensive training on how to use it effectively.

Their findings read like a textbook case for equipping individuals with the best tools. On average, consultants with AI access completed 12.2% more tasks and worked 25.1% faster than those without it. Even more striking, the quality of their work improved by over 40% when AI was integrated into the process.

But the most interesting insight was their argument about what they call a “jagged technological frontier”: some tasks fall inside it, where AI outperforms humans, and others fall outside it, where AI still falls short. When consultants used AI on tasks outside this frontier, those requiring uniquely human judgment or expertise, they were 19 percentage points less likely to produce correct solutions than those working without it.

In other words, the effectiveness of AI depends on the nature of the task itself.

But the frontier is not static. This paper, after all, was published in 2023. Even now, just two years later, tasks once better performed by humans have increasingly moved into AI’s domain. And given the pace of technological progress, this shift will likely continue at a rate that is difficult for many of us to fully grasp.

A second study published earlier this year—first brought to my attention through a thoughtful write-up by Erik Hoel—is an important counterbalance to this optimism.

Conducted by a team of researchers, many from Microsoft, the study examines how heavy users of AI engage in the cognitive work central to critical thinking. Their key finding? The more confidence users have in generative AI, the less they engage in such critical reasoning, as shown across the six key dimensions below. In other words, many users are leaning too heavily on AI outputs, often without sufficient scrutiny.

Take these two studies together, and you are left with a puzzle. On one hand, our ability to get things done has never been stronger. With access to a smartphone, anyone can tap into unprecedented pattern-recognition power applied to the vast world of publicly available knowledge. On the other hand, our language is becoming more uniform, our expression more homogenized, and our critical thinking skills may be at risk of atrophying.

As a leader in higher education, I can’t help but see this as a puzzle that demands both an answer and an institutional response.

Where Do We Go from Here?

So, how does this all tie back to the Pixar classic? Is your vision of the future closer to Axiom’s glossy pitch or to the lived reality WALL-E encounters on his visit?

While I am by no means an expert, I want to share my working theory on this in the hope that you will do the same.

First, as an educator, I increasingly worry about what happens when AI makes it so easy to traffic in summaries rather than spend time with primary sources. You can think of this as my moment of shaking my fist at “kids these days.”

Here is a quick example of what I mean. At three points in class this week, I referenced works (the play Junk and the novels How to Get Filthy Rich in Rising Asia and Tomorrow, and Tomorrow, and Tomorrow) that have formed my view of markets and of leadership in complex ethical situations. Call me old-fashioned, but pulling a summary of these works from an LLM doesn’t seem to hold a candle to the formation that happens through deep immersion in the texts. But with a summary always just a click away, I worry about our tendency to take the path less difficult, and about what that does to both critical thinking and human formation.

Second, I worry that the benefits and drawbacks of these tools will not be distributed equally. WALL-E shows us a vision in which all humans suffer the same negative consequences. In contrast, I am sure some of us will benefit a great deal from these tools, gaining more expansive access to knowledge and augmented intelligence without losing our capacity for critical thinking. What I am less sure of is whether everyone will share in those benefits.

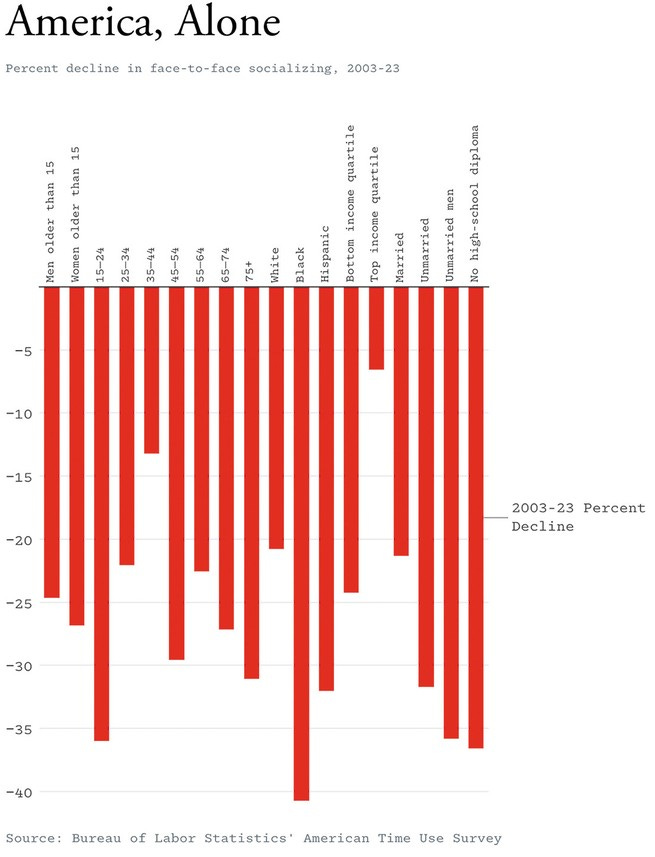

This is often the case with technological progress. Consider The Atlantic’s recent cover story on loneliness. While the story bemoans technology’s role in driving us into isolation, its data also reveal how unevenly that impact falls. Though we are all getting lonelier, people in the bottom income quartile are radically more affected than the top 1%. Black and Hispanic Americans feel the impact more than white Americans. Same technologies. Significantly different consequences.

So, where does this leave me as an educator?

It is very clear to me that I need to teach my students—sometimes 18-year-olds and sometimes executives in their 40s and 50s—to effectively use these tools without being overcome by them.

But in practice, this requires a mix of very different pedagogical strategies. At times, I ask students to shut down their computers, show me their eyes, and interact with one another instead of a screen. At other times, I ask them to pull out their computers and open the most up-to-date model, knowing I need to teach them to use these tools effectively.

Here is one relevant example. In class this week, I shared my own AI use case (shown below if interested) and then asked students to develop their own and share it with a peer. For their upcoming final paper, stealing an idea from the economist Tyler Cowen, I am requiring them both to use AI in their paper AND to document explicitly how they did so. The students will be evaluated on the quality of this use case, and I will share all 40 use cases with the whole class as a way to aid learning.

But am I being an educator on the cutting edge, or am I merely moving us closer to Axiom’s dystopia? I am honestly not sure. On any given day, I both love and rely upon a set of tools and worry about what they will do to my thinking, let alone to the four-year-old sitting next to me at home watching WALL-E.

Yet if we don’t explore both the dystopian, WALL-E-like possibilities and the optimistic case for what these tools make possible, we risk slipping into either blind evangelism or knee-jerk pessimism. Finding a balanced path forward is our collective task as we navigate this uncertain future.